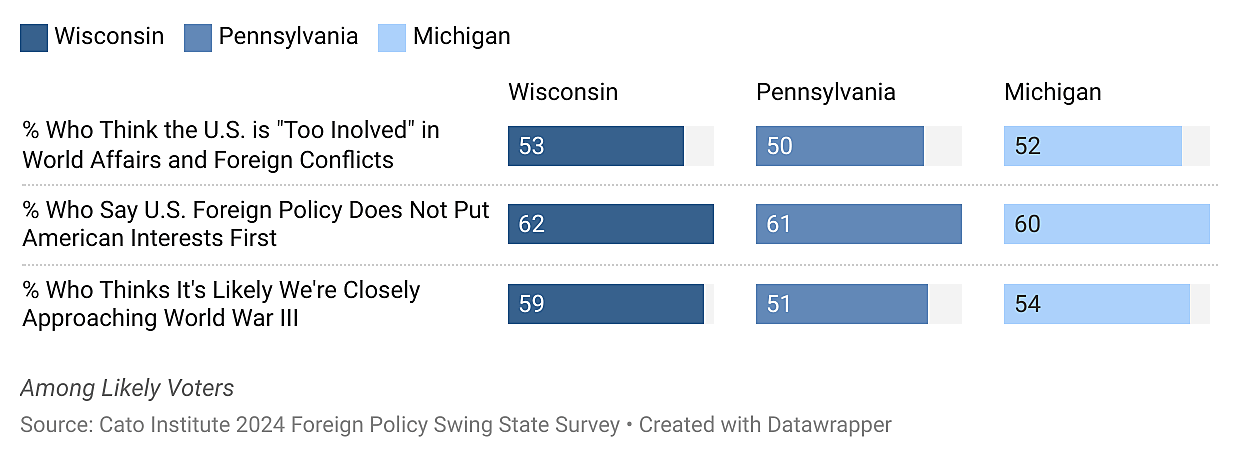

A new Cato Institute survey of 1,500 Americans, conducted by YouGov in the crucial swing states of Pennsylvania, Michigan, and Wisconsin, finds exhaustion with the current course of US foreign policy. Half or more say the US is “too involved” in world affairs and global conflicts (WI: 53%, PA: 50%, MI: 52%); majorities say US foreign policy does not put American interests first (WI: 62%, PA: 61%, MI: 60%); and majorities think it’s likely that the US is “closely” approaching World War III (WI: 59%, PA: 51%, MI: 54%).

The survey finds an especially close race between Vice President Kamala Harris and former President Donald Trump, with Harris ahead of Trump in Wisconsin (51% to 46%), Trump slightly ahead of Harris in Michigan (48% to 47%), and the two candidates tied in Pennsylvania with 47% each among likely voters. Taking into consideration margins of error, the candidates are statistically tied in these three states.

With margins this close, public opinion on foreign policy could affect the election outcome. Indeed, about half of likely voters in these three swing states say they are less likely to vote for a candidate who disagrees with them on foreign policy (WI: 52%, PA: 50%, MI: 52%).

Notably, the survey found voters trust Trump more than Harris to handle foreign policy by a margin of 4 percentage points in each state. They believe Trump is more likely to keep Americans out of foreign wars and conflicts (WI: 52%, PA: 51%, MI: 52%), to help end the war in Ukraine (WI: 51%, PA: 50%, MI: 54%), and to make foreign policy decisions based on American interests first (WI: 51%, PA: 54%, MI: 56%).

But these Rust Belt swing state voters also think Trump is more likely than Harris to get the U.S. into World War III (WI: 51%, PA: 51%, MI: 53%).

How could voters prefer Trump’s foreign policy but then worry he could instigate a global war? Voters may make a distinction between what a candidate says about policy and how they perceive that candidate’s judgment and impulsivity.

American Leadership in the World

Majorities of voters in these swing states would like the U.S. to play a “shared” leadership role in the world and global affairs (WI: 57%, PA: 53%, MI: 58%) rather than a dominant leadership role (WI: 38%, PA: 41%, MI: 32%). Few voters want the US to play no leadership role at all (WI: 5%, PA: 6%, MI: 10%).

Interestingly, although Republicans are much more likely than Democrats to say the US is too involved in global affairs, they are also much more likely than Democrats to say the US should play the “dominant” leadership role in world affairs.

Europe

Majorities of swing state voters believe the war in Ukraine is important for US national security (WI: 65%, PA: 70%, MI: 71%). Pluralities also believe the country’s relationship with Ukraine strengthens the United States (WI: 43%, PA: 37%, MI: 40%). However, they are divided over US handling of the war (WI: 39%, PA: 41%, MI: 40% approve) and over sending money and weapons to Ukraine (WI: 49%, PA: 45%, MI: 44% favor). After learning the US has sent Ukraine $170 billion in military aid and equipment, voters become more reluctant to send more. Half or more want to reduce or stop aid going forward (WI: 50%, PA: 54%, MI: 57%).

Most believe that the war in Ukraine will lead to a broader war in Europe (WI: 59%, PA: 54%, MI: 63%), but most in Wisconsin and Pennsylvania think it’s unlikely that such a war would pull in the United States (WI: 52%, PA: 55%). In Michigan, slightly more think America could get pulled into the war (53%). Still, these voters would oppose sending money and weapons to Ukraine if doing so risked drawing the US into war.

NATO

Voters in Pennsylvania, Michigan, and Wisconsin have solidly favorable views of NATO (WI: 60%, PA: 56%, MI: 55%), and only a few want the US to leave the organization (WI: 15%, PA: 13%, MI: 15%). Notably, about half of voters say the US should not continue to defend NATO member countries that fail to follow NATO’s rule that they contribute 2% of their GDP to defense (WI: 44%, PA: 52%, MI: 47%).

Swing state voters are more likely to agree that the US should stay in NATO because American involvement is essential to peace and stability in Europe (WI: 71%, PA: 74%, MI: 70%) than that the US should withdraw from NATO because the Europeans are freeriding on US defense (WI: 29%, PA: 26%, MI: 30%).

Middle East

Pluralities of these swing state voters believe that US involvement in the Middle East has done more to worsen America’s national security (WI: 47%, PA: 50%, MI: 49%). An even larger share, 8 in 10 swing state voters, believe the US cannot fix the conflicts happening in the region even if the country devoted more money, soldiers, and resources.

Israel-Hamas War

Eight in 10 swing state voters say that the way Hamas carried out its attack on Israel on October 7, 2023, was unacceptable. However, voters are divided about the way Israel has carried out its response. Eight in 10 swing state voters support an immediate ceasefire in Gaza.

Seven in 10 believe that the Israel-Hamas war in Gaza is important to national security, and most believe the war could lead to a broader war in the Middle East (WI: 73%, PA: 77%, MI: 73%). If a broader war did break out, these voters would lean toward sending military aid and equipment to Israel (WI: 51%, PA: 50%, MI: 44%) but against entering the war itself (WI: 53%, PA: 49%, MI: 48% opposed).

Asia

Majorities of voters in Wisconsin, Michigan, and Pennsylvania believe that US lawmakers pay too little attention to competition with China (WI: 54%, PA: 54%, MI: 62%). While few voters are “very familiar” with the tensions between China and Taiwan (WI: 24%, PA: 25%, MI: 21%), most believe those tensions are important to America’s national security (WI: 62%, PA: 65%, MI: 63%). If China prevented Taiwan from trading with other countries, about a quarter would want the US not to get involved, two-thirds would want to impose sanctions, a fifth would send military aid to Taiwan, and very few would support sending troops (WI: 2%, PA: 1%, MI: 3%). If China invaded Taiwan, about a quarter of voters still would not want to get involved, about half would want to impose sanctions, about a third would want to send money and equipment, and few would want to go to war (WI: 8%, PA: 8%, MI: 9%).

Mexico

Majorities of swing state voters support using military force to combat drug cartels in Mexico (WI: 55%, PA: 55%, MI: 51%). However, if the Mexican government opposed US involvement, majorities would oppose sending US troops to combat drug cartels (WI: 67%, PA: 64%, MI: 63%).

Methodology

The Cato Institute 2024 Foreign Policy Swing State Survey was designed and conducted by the Cato Institute in collaboration with YouGov. YouGov collected responses online from Americans in Pennsylvania, Michigan, and Wisconsin from August 15 to 23, 2024, with samples of 500 in each state. Restrictions are in place to ensure that only the people selected and contacted by YouGov can participate. At the 95% level of confidence, the margins of error are WI: +/- 5.07, PA: +/- 4.99, and MI: +/- 5.03 percentage points; among likely voters they are WI: +/- 5.92, PA: +/- 5.93, and MI: +/- 5.95 percentage points.

The topline questionnaire and survey methodology can be found here (PDF) (XLS), and crosstabs can be found here (XLS). If you would like to speak to Dr. Ekins about the poll’s results, please contact pr@cato.org or call 202-789-5200.