You Ought to Have a Look is a feature from the Center for the Study of Science posted by Patrick J. Michaels and Paul C. (“Chip”) Knappenberger. While this section will feature all of the areas of interest that we are emphasizing, the prominence of the climate issue is driving a tremendous amount of web traffic. Here we post a few of the best in recent days, along with our color commentary.

—

Badges? Do we need these stinking badges?

Need, perhaps not, but apparently some of us actually want them and will go to great lengths to get them. We’re not talking about badges for, say, being a Federal Agent At-Large for the Bureau of Narcotics and Dangerous Drugs:

(source: Smithsonianmag.org)

But rather badges like these, given out by the editors of Psychological Science for being a good data sharer and playing well with others:

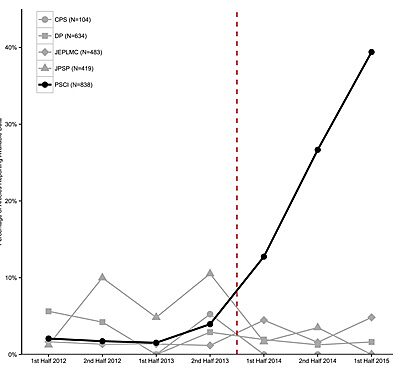

A new paper by Mallory Kidwell and colleagues examined the impact of Psychological Science’s badge system and found it to be quite effective at getting authors to make their data and materials available to others through an open access repository. Compared with four “comparison journals,” Psychological Science saw a rapidly rising rate of participation and level of research transparency after it implemented the badge system (Figure 1).

Figure 1. Percentage of articles reporting open data by half year by journal. The darker line indicates Psychological Science, and the dotted red line indicates when badges were introduced in Psychological Science (badges were introduced in none of the comparison journals). (Source: Kidwell et al., 2016).

Why is this important? The authors explain:

Transparency of methods and data is a core value of science and is presumed to help increase the reproducibility of scientific evidence. However, sharing of research materials, data, and supporting analytic code is the exception rather than the rule. In fact, even when data sharing is required by journal policy or society ethical standards, data access requests are frequently unfulfilled, or available data are incomplete or unusable. Moreover, data and materials become less accessible over time. These difficulties exist in contrast to the value of openness in general and to the move toward promoting or requiring openness by federal agencies, funders, and other stakeholders in the outcomes of scientific research.

For an example of data protectionism taken to the extreme, we remind you of the Climategate email tranche, where you’ll find gems like this:

“We have 25 or so years invested in the work. Why should I make the data available to you, when your aim is to try and find something wrong with it.”

-Phil Jones email Feb. 21, 2005

This type of attitude, on display throughout the Climategate emails, makes the need for a push for more transparency plainly evident.

Kidwell et al. conclude that the badge system, as silly as it may seem, actually works quite well:

Badges may seem more appropriate for scouts than scientists, and some have suggested that badges are not needed. However, actual evidence suggests that this very simple intervention is sufficient to overcome some barriers to sharing data and materials. Badges signal a valued behavior, and the specifications for earning the badges offer simple guides for enacting that behavior. Moreover, the mere fact that the journal engages authors with the possibility of promoting transparency by earning a badge may spur authors to act on their scientific values. Whatever the mechanism, the present results suggest that offering badges can increase sharing by up to an order of magnitude or more. With high return coupled with comparatively little cost, risk, or bureaucratic requirements, what’s not to like?

The full findings of Kidwell et al. are published in the open access journal PLOS Biology, and yes, they’ve made all their materials readily available!

Another article that caught our eye this week provides further indication of why transparency in science is more necessary than ever. In a column in Nature, Daniel Sarewitz suggests that the pressure to publish tends to result in lower-quality papers through what he describes as “a destructive feedback between the production of poor-quality science, the responsibility to cite previous work and the compulsion to publish.” He cites an example of a contaminated cancer cell line that gave rise to hundreds of (wrong) published studies that receive over 10,000 citations per year. Sarewitz blames the internet and search engines for this hyper-citation, because they make literature searches vastly easier than in the old days, when a search required a trip to the library stacks and lots of flipping through journals. Now, not so much.

Sarewitz doesn’t see this rapid expansion of the scientific literature and citation numbers as a good trend, and offers an interesting way out:

More than 50 years ago, [it was] predicted that the scientific enterprise would soon have to go through a transition from exponential growth to “something radically different”, unknown and potentially threatening. Today, the interrelated problems of scientific quantity and quality are a frightening manifestation of what [was foreseen]. It seems extraordinarily unlikely that these problems will be resolved through the home remedies of better statistics and lab practice, as important as they may be. Rather, they would seem …to announce that the enterprise of science is evolving towards something different and as yet only dimly seen.

Current trajectories threaten science with drowning in the noise of its own rising productivity… Avoiding this destiny will, in part, require much more selective publication. Rising quality can thus emerge from declining scientific efficiency and productivity. We can start by publishing less, and less often…

With the publish-or-perish culture securely ingrained in our universities, coupled with evaluation systems based on how often your research is cited by others, it’s hard to see Sarewitz’s suggestion taking hold anytime soon, as good as it may be.

In the same vein is an article by Paula Stephan and colleagues titled “Bias against novelty in science: A cautionary tale for users of bibliometric indicators.” Here the authors make the point that:

There is growing concern that funding agencies that support scientific research are increasingly risk-averse and that their competitive selection procedures encourage relatively safe and exploitative projects at the expense of novel projects exploring untested approaches. At the same time, funding agencies increasingly rely on bibliometric indicators to aid in decision making and performance evaluation.

This situation, the authors argue, depresses novel research and instead encourages safe research that supports the status quo:

Research underpinning scientific breakthroughs is often driven by taking a novel approach, which has a higher potential for major impact but also a higher risk of failure. It may also take longer for novel research to have a major impact, because of resistance from incumbent scientific paradigms or because of the longer time-frame required to incorporate the findings of novel research into follow-on research…

The finding of delayed recognition for novel research suggests that standard bibliometric indicators which use short citation time-windows (typically two or three years) are biased against novelty, since novel papers need a sufficiently long citation time window before reaching the status of being a big hit.

Stephan’s team ultimately concludes that this “bias against novelty imperils scientific progress.”

Does any of this sound familiar?