You Ought to Have a Look is a feature from the Center for the Study of Science posted by Patrick J. Michaels and Paul C. (“Chip”) Knappenberger. While this section will feature all of the areas of interest that we are emphasizing, the prominence of the climate issue is driving a tremendous amount of web traffic. Here we post a few of the best in recent days, along with our color commentary.

—

What’s lost in a lot of the discussion about human-caused climate change is not the fact that the sum of human activities is leading to some warming of the earth, but the fact that the observed rate of warming (both at the earth’s surface and throughout the lower atmosphere) is considerably less than has been anticipated by the collection of climate models upon whose projections climate alarm (i.e., justification for strict restrictions on the use of fossil fuels) is built.

In this installment of You Ought to Have a Look, we highlight a couple of articles on this topic that we think are worth checking out.

First is this post from Steve McIntyre over at Climate Audit that we managed to dig out from among all the “record temperatures of 2015” stories. In his analysis, McIntyre places the 2015 global temperature anomaly not in real-world context, but in the context of the world of climate models.

Climate model-world is important because that is the realm where climate change catastrophes play out, and those simulated catastrophes drive real-world people to act in hopes of keeping them confined to model-world.

So how did the observed 2015 temperatures compare to model-world expectations? Not so well.

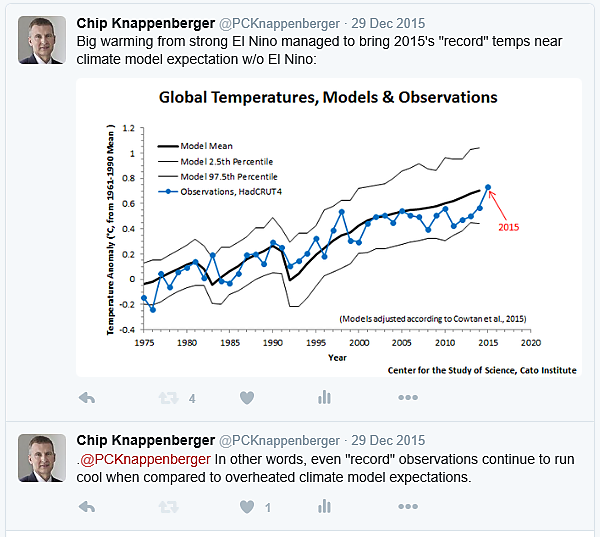

In a series of tweets over the holidays, we pointed out that the El Niño-fueled, record-busting high temperatures of 2015 barely reached the temperatures that the climate models expect for an average year.

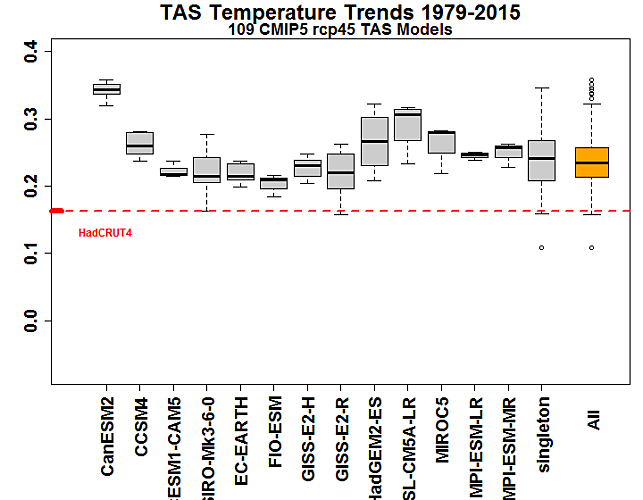

In his post, unconstrained by Twitter’s 140-character limit, McIntyre takes a more detailed look at the situation. He includes additional examinations of the satellite record of temperatures in the lower atmosphere, as well as a comparison of observed trends and model-expected trends in both the surface and lower atmospheric temperature histories since 1979.

The latter comparison for global average surface temperatures looks like this, with the observed trend (the red dashed line) falling near or below the fifth percentile of the expected trends (the lower whisker) from a host of climate models:

McIntyre writes:

All of the individual models have trends well above observations… There are now over 440 months of data and these discrepancies will not vanish with a few months of El Nino.
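To make the kind of comparison described above concrete, here is a minimal sketch of how one might check whether an observed trend falls at or below the fifth percentile of a set of model trends. It is not McIntyre's code or method; the function names (`ols_slope`, `percentile`, `trend_vs_ensemble`) are hypothetical, and the numbers in the usage lines are synthetic placeholders, not real temperature data.

```python
from typing import List, Sequence, Tuple


def ols_slope(y: Sequence[float]) -> float:
    """Least-squares slope of y against its index (units of y per time step)."""
    n = len(y)
    if n < 2:
        raise ValueError("need at least two points to fit a trend")
    x_mean = (n - 1) / 2
    y_mean = sum(y) / n
    num = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(y))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def percentile(values: Sequence[float], q: float) -> float:
    """Percentile by linear interpolation between sorted values, q in [0, 100]."""
    if not values:
        raise ValueError("need at least one value")
    s = sorted(values)
    pos = (len(s) - 1) * q / 100
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def trend_vs_ensemble(
    observed: Sequence[float],
    model_runs: List[Sequence[float]],
    q: float = 5.0,
) -> Tuple[float, float, bool]:
    """Return (observed trend, q-th percentile of model trends, obs <= percentile)."""
    obs_trend = ols_slope(observed)
    model_trends = [ols_slope(run) for run in model_runs]
    threshold = percentile(model_trends, q)
    return obs_trend, threshold, obs_trend <= threshold


# Synthetic placeholder series (NOT real data): one "observed" series
# and three "model" series with steeper slopes.
observed = [0.0, 1.0, 2.0]
model_runs = [[0.0, 2.0, 4.0], [0.0, 3.0, 6.0], [0.0, 4.0, 8.0]]
obs_trend, p5, below = trend_vs_ensemble(observed, model_runs)
```

With real data, the inputs would be monthly anomaly series over a common period, and the slope would usually be rescaled to degrees per decade. A simple least-squares slope also ignores autocorrelation, so it says nothing about the uncertainty of each trend.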

Be sure to check out the whole article here. We’re pretty sure you won’t read about any of this in the mainstream media.

Next up is an analysis by independent climate researcher Nic Lewis, whose specialty these days is developing estimates of the earth’s climate sensitivity (how much the earth’s average surface temperature is expected to rise under a doubling of the atmospheric concentration of carbon dioxide) based upon observations of the earth’s temperature evolution over the past 100–150 years. Lewis’s general finding is that the climate sensitivity of the real world is quite a bit less than it is in model world (a result that could explain much of what McIntyre reported above).

The current focus of Lewis’s attention is a recently published paper by a collection of NASA scientists, led by Kate Marvel, which concluded that observation-based estimates of the earth’s climate sensitivity, such as those performed by Lewis, greatly underestimate the actual sensitivity. After accounting for the reasons why, Marvel and her colleagues conclude that climate models are, contrary to the assertions of Lewis and others, accurately portraying how sensitive the earth’s climate is to changing greenhouse gas concentrations (see here for details). It thus follows that these models serve as reliable indicators of the future evolution of the earth’s temperature.

As you may imagine, Lewis isn’t so quick to embrace this conclusion. He explains his reasons in great detail in a lengthy (technical) article posted at Climate Audit, and provides a more easily digestible version over at Climate Etc.

After detailing a fairly long list of inconsistencies, not only within the Marvel et al. study itself but also between the Marvel study and other papers in the scientific literature, Lewis concludes:

The methodological deficiencies in and multiple errors made by Marvel et al., the disagreements of some of its forcing estimates with those given elsewhere for the same model, and the conflicts between the Marvel et al. findings and those by others – most notably by James Hansen using the previous GISS model, mean that its conclusions have no credibility.

Basically, Lewis suggests that Marvel et al.’s findings are based upon a single climate model (out of several dozen in existence) and seem to arise from improper application of analytical methodologies within that single model.

Certainly, the Marvel et al. study introduced some interesting avenues for further examination. But despite being touted as freshly paved highways to the definitive conclusion that climate models are working better than real-world observations seem to indicate, they are muddy, pot-holed backroads leading nowhere in particular.

Finally, we want to draw your attention to an online review of a paper recently published in the scientific literature which sought to dismiss the recent global warming “hiatus” as nothing but the result of a poor statistical analysis. The paper, “Debunking the climate hiatus,” was written by a group of Stanford University researchers led by Bala Rajaratnam and published in the journal Climatic Change last September.

The critique of the Rajaratnam paper was posted by Radford Neal, a statistics professor at the University of Toronto, on his personal blog that he dedicates to (big surprise) statistics and how they are applied in scientific studies.

In his lengthy, technically detailed critique, Neal pulls no punches:

The [Rajaratnam et al.] paper was touted in popular accounts as showing that the whole hiatus thing was mistaken — for instance, by Stanford University itself.

You might therefore be surprised that, as I will discuss below, this paper is completely wrong. Nothing in it is correct. It fails in every imaginable respect.

After tearing through the numerous methodological deficiencies and misapplied statistics contained in the paper, Neal is left shaking his head at the peer-review process that allowed this paper to be published in the first place, and offers this warning:

Those familiar with the scientific literature will realize that completely wrong papers are published regularly, even in peer-reviewed journals, and even when (as for this paper) many of the flaws ought to have been obvious to the reviewers. So perhaps there’s nothing too notable about the publication of this paper. On the other hand, one may wonder whether the stringency of the review process was affected by how congenial the paper’s conclusions were to the editor and reviewers. One may also wonder whether a paper reaching the opposite conclusion would have been touted as a great achievement by Stanford University. Certainly this paper should be seen as a reminder that the reverence for “peer-reviewed scientific studies” sometimes seen in popular expositions is unfounded.

Well said.