After an influx of new users, Parler, a social media platform offered as a liberally governed alternative to Twitter, implemented a few basic rules. These rules prompted frustration and gloating from the platform’s respective fans and skeptics, but Parler is simply advancing along a seemingly inevitable content moderation curve. Parler’s attempt to create a more liberal social media platform is commendable, but the natural demands of moderation at scale will make this commitment difficult to keep.

Parler was initially advertised as moderated in line with First Amendment precedent and Federal Communications Commission broadcast guidelines, which differ considerably from each other. However, the platform’s terms of service reserve the right to “remove any content and terminate your access to the Services at any time and for any reason or no reason,” and include a provision requiring users to cover Parler’s legal fees in suits over user speech. While the latter section is unlikely to hold up in court, the entirely standard reservation of the right to exclude was seen as a betrayal of Parler’s promise of an unobstructed platform for free speech.

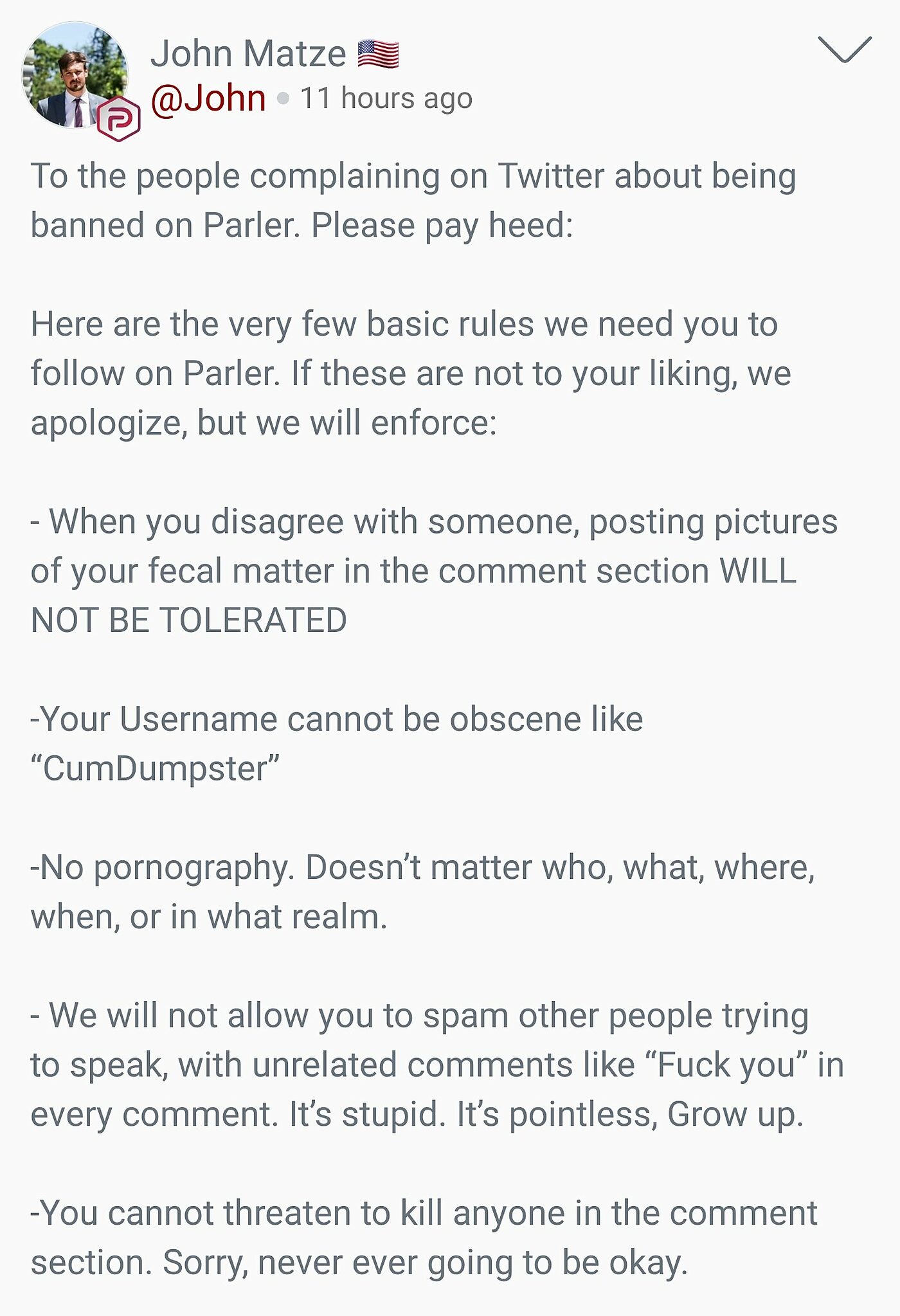

Parler’s decision to ban trolls who had begun impersonating MAGA celebrities, and to implement a few rules that, while quite limited, went beyond what might be expected of a First Amendment standard, contributed to the perception of hypocrisy.

Although these rules may prohibit what the First Amendment would protect, they are largely in keeping with Parler’s more implicit founding promise: not to be a place for all speech, but to offer a home for conservatives put off by Twitter’s ostensibly overbearing moderation. Parler’s experience illustrates the impossibility and undesirability of preventing alleged bias by governing differing social media platforms under a one-size-fits-all First Amendment standard. For those who imagined that Parler would strictly apply the First Amendment, or that such a platform would be practicable or manageable, the developments were disappointing. However, for those who simply want an alternative to Twitter that governs with conservative values in mind, Parler’s growing pains present a difficult but viable path forward. Parler’s incentives to moderate will only increase as it grows, and scale will make transparent, context-aware moderation all the more difficult.

Platforms usually develop new policies in response to crises or unforeseen, unwanted platform uses. One of the first recorded user bans, within a text-based multiplayer game called LambdaMOO, came in response to the unanticipated use of a voodoo doll item to narrate obscene actions by other players. After the livestreamed Christchurch shooting, Facebook placed new restrictions on Facebook Live use. These changes can be substantial: Reddit once committed to refrain from removing “distasteful” subreddits but changed its stance in response to user communities dedicated to violence against women and upskirt photos.

Because Parler is a small, young platform, the sorts of outrageous, low-probability events that have driven rule changes and moderation elsewhere just haven’t happened there yet. This week saw the first of these rule-building incidents. Trolls hoping to test the boundaries of Parler’s commitment to free speech and get a rise out of its users began, often vulgarly, impersonating prominent Trump supporters and conservative publications. Whether the recent banning of the Chapo Trap House subreddit contributed to the number of bored leftists trolling Parler is an interesting but probably unanswerable question. Drawing a line between parody and misleading impersonation is a problem that has long bedeviled content moderators. Twitter has developed policies governing impersonation, as well as rules for parody and fan accounts. Twitter may have drawn its lines imperfectly, but it drew them in light of a decade of experience with novel forms of impersonation.

Parler responded by banning the impersonating accounts, including some inoffensive parodies. Devin Nunes’ Cow, a long-running Twitter parody account and the subject of a lawsuit by Rep. Nunes (R‑CA) against Twitter, followed the Congressman to Parler and was subsequently banned. That Parler responded in such a blunt fashion is unsurprising. As a small platform, it has limited moderation resources to address impersonation claims, and the vitality of its network relies somewhat upon the presence of prominent Trump supporters like Rep. Nunes. While the platform has called for volunteer moderators, likening the work to service on a jury, this may introduce biases of its own.

Banning these sorts of impersonators is unlikely to upset Parler’s core userbase of Twitter-skeptical conservatives. A seeming blanket ban of Antifa supporters from the platform, announced by founder John Matze, might be similarly understood as an anti-harassment measure. However, if the platform’s governance is beholden to the tastes of a specific community or the whims of its founders, it may have difficulty attracting a wider audience and maintaining some sort of First Amendment standard. These are not unique problems: Pinterest has long labored to shed the perception that it’s just for women, while content delivery network Cloudflare struggled with the ramifications of its founder’s unilateral ability to remove hateful content such as the white supremacist site Stormfront. Parler may simply aspire to be a social media platform for conservatives. Niche platforms like JDate, a Jewish dating site, and Ravelry, a social network for knitters, have undoubtedly been successful. If Parler pursues this path, it will provide a safe space for Trump supporters, but won’t have the level of cultural impact enjoyed by Twitter. It may also simply be boring if it becomes an ingroup echo chamber without opportunities for engagement, conversation, or conflict with ideological others.

Parler is working to move beyond some of its small platform problems. Matze’s profile now includes the line “Official Parler statements come from @Parler,” and the platform has issued its first set of community guidelines. The guidelines illustrate the unavoidable tension between Parler’s desire to utilize a First Amendment standard and its desire to create a social media platform that is both governable and enjoyable. In many cases, the guidelines make reference to specific Supreme Court precedent, clearly trying to mirror the strictures of the First Amendment; however, they also include a blanket prohibition on spam, which, while a bedrock norm of the internet, has no support in First Amendment caselaw. In practice, reliance on areas of law that have essentially been allowed to lie fallow, often to the frustration of conservatives, produces vague guidance such as: “Do not use language/visuals that are offensive and offer no literary, artistic, political, or scientific value.” In time, Parler will need to offer more specific rules for its platform, rules applicable at scale while still reflective of its values. As a model for social media, a First Amendment standard with additions driven by contingency may work well. But in time, it may look more like the rules of other platforms than intended and will ultimately reflect the fact that the First Amendment is made practicable by the varied private rules layered on top of it.

As Parler grows, it will face the big platform problem of enforcing its rules at scale. Parler may be able to derive a standard of obscenity that works for its current userbase, but new users and communities will discover new ways to contest it. Millions of users will find more ways to use and misuse a platform than hundreds of thousands of users, and network growth usually outstrips increases in moderation capacity. Moderators will have to make more moderation decisions in less time, and often with less context. If Parler hosts multiple communities of interest, it will need to understand their distinct cultures and idiosyncrasies of language and mediate between their different norms.

While a larger Parler may hope to maintain a liberal or constitutional approach to moderation, this will become difficult when, at any moment, thousands of people are using the platform to sell stolen antiquities, violate copyright, and discuss violence in languages its moderators don’t understand. Add the pressure of the press and politicians demanding that the platform do something or face nebulous regulation, and liberalism becomes harder to maintain. In the face of these novel problems of scale, platforms with different purposes and structures all seem to suffer from opaque decision-making and overreliance on external expertise. Even if they wish to govern differently, they have neither the time to explain every moderation decision nor the in-house expertise to understand every new unanticipated misuse. Individual users also matter less when platforms have millions or billions of them. While this frees platforms from reliance on particular superusers, it erodes the feedback loops that help to rein in overbroad moderation.

Parler’s early commitment to free speech, and the charges of hypocrisy that attended its initial rulemaking, may help it to resist later demands for increased moderation. However, platforms have long gone back on earlier assurances of liberal governance: over the past decade, Reddit went from maintaining a commitment to refrain from removing subreddits to removing hundreds of subreddits in a single day. While Parler hopes to resist the novel demands of moderation at scale, other platforms have been unable to do so. Though Parler may yet succeed, it is likely that these constraints are structural and will only be surmounted by a commitment to decentralization in the architecture and code of the platform itself. Human commitments, even commitments made with the best of intentions, are simply too vulnerable to the unanticipated pressures of governance at scale.