It’s been a day since the disappointing “Nation’s Report Card” results came out, and it has given me a chance to crunch some numbers a bit. They don’t tell us anything definitive – there is a lot more that impacts test scores than a policy or two – but it is worth seeing if there are any patterns that might bear further analysis, and it is important to explore emerging theories.

Not surprisingly, while many observers have been rightly hesitant to make grand pronouncements about what the scores mean, some theories revolving around the Common Core have come out. The one I’ve seen the most, coming from people such as U.S. Secretary of Education Arne Duncan and Karen Nussle of the Core-defending Collaborative for Student Success, is that the Core will bring great things, but transitioning to it is disruptive and we should expect to see short-term score drops as a result.

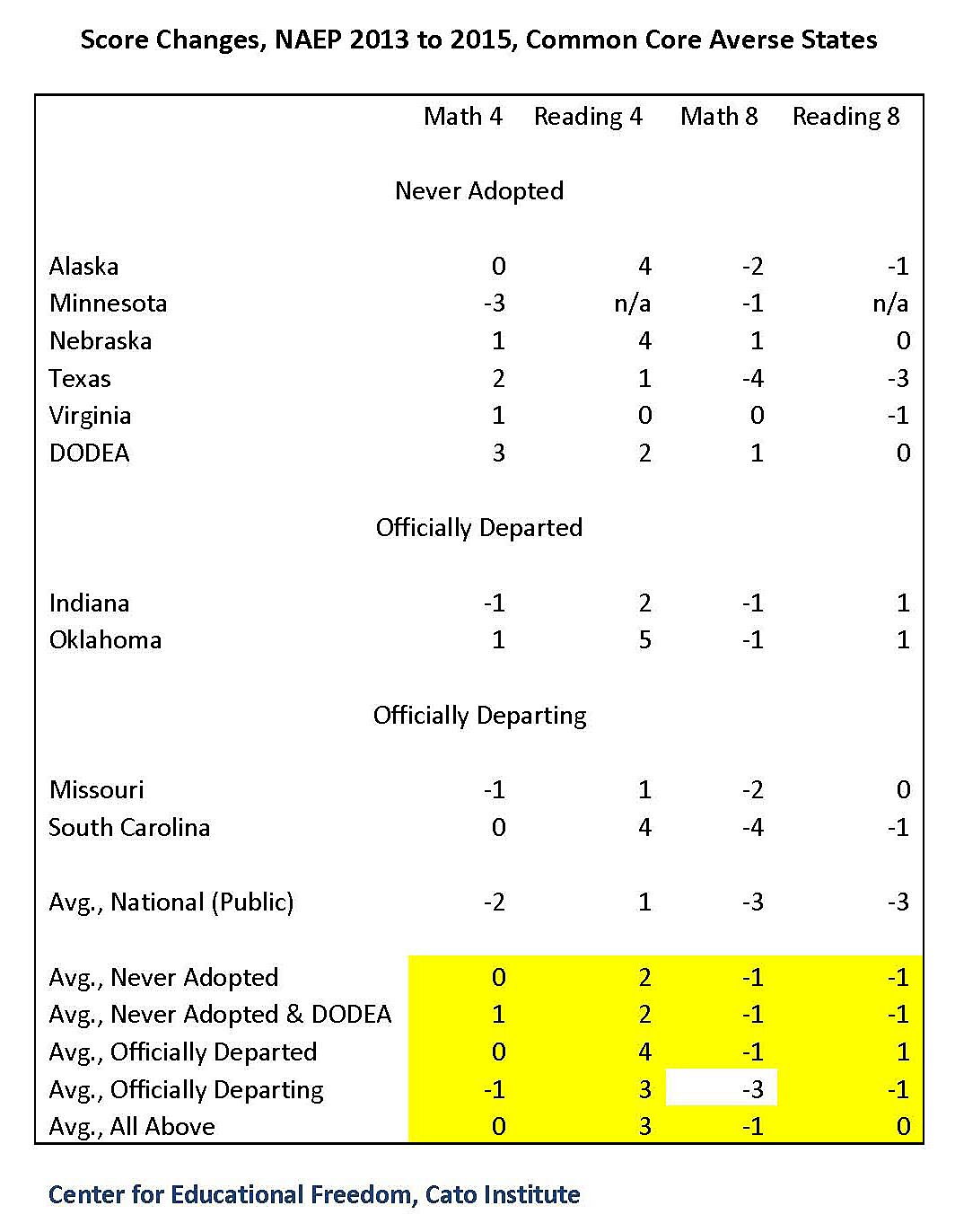

That is plausible, and we can test it a bit by looking at the performance of states (and the Department of Defense Education Activity) that have demonstrated some level of what I’ll call Core aversion. Those are states that (1) hadn’t adopted the Core at the time of the NAEP test; (2) had adopted but had moved away by testing time; and (3) were still using the Core at test time but officially plan to move away. They are broken down in the following table, which uses score changes in the charts found here:

What we see in the highlighted area is that, with the exception of eighth-grade math scores for states that will be departing the Core but were still in it as of NAEP testing, Core-averse states posted better average score changes than the national average in all four testing categories.

That the states that never adopted the Core outperformed the average could indicate that the disruption theory is correct: forgoing the transition to the Core enabled better performance. Of course, it could also be that the Core standards are less effective at boosting NAEP scores than the standards Core-averse states are using. Or that those states had better economies over the last two years. Or any of many other possibilities.

The problem for the disruption theory is that the states that adopted the Core but then dropped it likely underwent greater disruption than states that have been consistently working on the Core. Indeed, Michael Petrilli of the Core-supporting Fordham Institute said that Oklahoma was plunged into “chaos” when it abandoned the Core in May 2014. Yet the Core-dropping states performed better than the national average. It is also likely that the two states that announced they would be leaving the Core but were still in it as of the time of the NAEP testing – Missouri and South Carolina – experienced greater disruptions than consistent Core states.

Of course, much deeper analysis of the NAEP data, and more iterations of the test, will be needed to reach any firm conclusions about the Core's effects. But the disruption theory already seems to be in a bit of trouble.