Yesterday, Nature Geoscience published an article by Richard Millar of the University of Exeter and nine coauthors stating that the climate models have been predicting too much warming. Adjusting for this, along with slightly larger emissions reductions by 2030 (followed by much more rapid ones after that), leaves total human-induced warming of around 1.5°C by 2100, which conveniently is the aspirational warming target in the Paris Accord. Much of it resembles material in our 2016 book Lukewarming.

This represents a remarkable turnaround. At the time of Paris, one of the authors, Michael Grubb, said its goals were “simply incompatible with democracy.” Indeed, the impossibility of Paris seemed self-evident. What he hadn’t recognized at the time was the reality of “the pause” in warming that began in the late 1990s and ended in 2015. Taking this into consideration changes things.

If Paris is an admitted failure, then the world is simply not going to take its (voluntary, unenforceable) Paris “Contributions” seriously, but Millar’s new result changes things. He told Jeff Tollefson, a reporter for Nature, “For a lot of people, it would probably be easier if the Paris goal was actually impossible. We’re showing that it’s still possible. But the real question is whether we can create the policy action that would actually be required to realize these scenarios.”

Suddenly it’s feasible, if only we will reduce our emissions even more.

Coincidentally, we just had a peer-reviewed paper accepted for publication by the Air and Waste Management Association, and it goes Millar et al. one better. It’s called “Reconciliation of Competing Climate Science and Policy Paradigms,” and you can find an advance copy here.

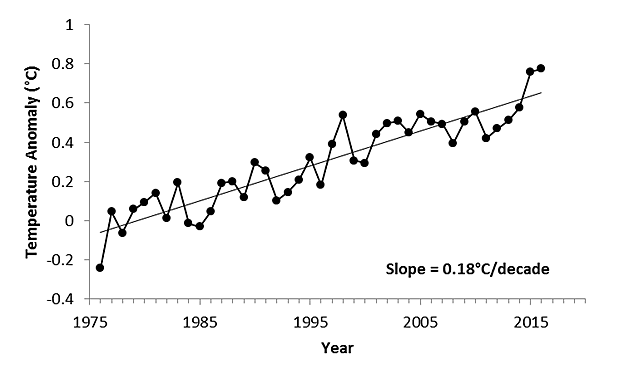

We note the increasing discrepancy between the climate models and reality, but instead of running a series of new models, we rely upon the mathematical form of observed warming. Since the second warming of the 20th century began in the late 1970s, and despite the “pause,” the rate has been remarkably linear. Most climate models actually simulate that linearity; they just overestimate the slope of the increase. However, one model, the INM-CM4 model from Russia’s E.M. Volodin, does have the right rate of increase.

Figure 1. Despite the “pause”, the warming beginning in the late 1970s is remarkably linear, which is a general prediction of climate models. The models simply overestimated the rate of increase.
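For readers who want to see what "same linear form, different slope" means in practice, here is a minimal sketch of an ordinary least-squares trend comparison. The two series are synthetic placeholders generated inside the code, not the observations or model output behind Figure 1, and the names `ols_slope`, `synthetic_series`, and the two `ILLUSTRATIVE_*` rates are our own inventions for illustration only.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


years = list(range(1979, 2017))

# ASSUMPTION: placeholder trend rates in degrees C per year, chosen only so
# the two lines differ. They are NOT taken from any dataset or paper.
ILLUSTRATIVE_OBS_RATE = 0.015
ILLUSTRATIVE_MODEL_RATE = 0.025


def synthetic_series(rate, wiggle):
    """A straight line plus an alternating +/- wiggle standing in for noise."""
    return [
        rate * (year - years[0]) + (wiggle if i % 2 else -wiggle)
        for i, year in enumerate(years)
    ]


obs = synthetic_series(ILLUSTRATIVE_OBS_RATE, wiggle=0.1)
model = synthetic_series(ILLUSTRATIVE_MODEL_RATE, wiggle=0.0)

obs_slope = ols_slope(years, obs)
model_slope = ols_slope(years, model)
ratio = model_slope / obs_slope

print(f"fitted 'observed' trend: {obs_slope * 10:.3f} C/decade")
print(f"fitted 'model' trend:    {model_slope * 10:.3f} C/decade")
print(f"model/observed slope ratio: {ratio:.2f}")
```

With real data, the same fit would be run on an observed temperature record and on each model's output over the same years; a ratio above 1 would indicate a model warming faster than observed.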

The Paris Agreement erroneously assumes all warming since the early 19th century is caused by greenhouse-gas emissions. That is patently absurd, as the warming of the early 20th century, from 1910–45, can have had little to do with them. If it were caused by them, the warming rate now would be astronomical, because the “sensitivity” of temperature to carbon dioxide would have to be inordinately high. Adjusting for the early warming means that the component from human activity is more likely to be about 0.5°C of the 0.9°C observed to date. Measured against the 2.0°C upper limit, that leaves around 1.5°C more truly “permissible” under Paris.
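The arithmetic here is simple enough to write down. The sketch below uses only the figures quoted in this post (the 2.0°C high-end cap, 0.9°C observed, 0.5°C attributed to human activity); the function name `remaining_allowance` is hypothetical, and this is an illustration of the bookkeeping, not a calculation taken from the paper.

```python
# Figures as quoted in this post, in degrees C.
PARIS_UPPER_CAP_C = 2.0    # high-end cap in the Paris Agreement
OBSERVED_WARMING_C = 0.9   # total warming observed to date
HUMAN_COMPONENT_C = 0.5    # portion attributed here to human activity


def remaining_allowance(cap_c, already_counted_c):
    """Warming still 'permissible' once the counted portion is subtracted."""
    return cap_c - already_counted_c


# Counting only the human-induced component against the cap.
human_only = remaining_allowance(PARIS_UPPER_CAP_C, HUMAN_COMPONENT_C)

# Counting all observed warming against the cap, as Paris assumes.
all_observed = remaining_allowance(PARIS_UPPER_CAP_C, OBSERVED_WARMING_C)

print(f"remaining if only human-induced warming counts: {human_only:.1f} C")
print(f"remaining if all observed warming counts:       {all_observed:.1f} C")
```

The gap between the two results is just the 0.4°C of early warming that we argue should not be charged to greenhouse-gas emissions.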

Climate projections based upon past data tend not to be as hot as the models cited in Millar et al. Taking this into account, along with the fact that there will be an economically driven, increasing substitution of natural gas for coal in electrical generation (which results in less than half the carbon dioxide emissions), we come up with a total of 2.0°C of warming by 2100, which is the high-end cap in the Paris Agreement, without any more “commitments” to reductions.

So now the battle lines have been redrawn. One argument is that we can meet Paris but we have to reduce emissions dramatically, especially after 2030, and the other is that we will meet Paris because of economic factors, coupled with an adjustment for the overforecasting of the climate models.